Launching a software product without a solid testing strategy is like sending a rocket into space without a system check. One small error can lead to massive failures, unhappy users, and damage to your brand.

Yet many teams still struggle with inconsistent testing, relying on ad-hoc methods that miss critical bugs and delay releases. A frequently cited industry estimate is that bugs found in production can cost up to 100 times more to fix than those caught early in development. Without a structured approach, it’s easy to overlook edge cases, waste resources on repetitive manual tests, or fail to scale testing with product growth.

That’s why adopting the right software testing strategy is essential. From test automation and shift-left testing to risk-based approaches, a well-defined strategy helps teams ensure quality, improve efficiency, and deliver software with confidence. In this guide, we’ll explore key strategies you can implement to level up your QA game.

What is a test strategy in software testing?

A test strategy is an overarching document that defines the approach, objectives, scope, and resources for testing a software project. It serves as a blueprint to guide the testing process, ensuring the software meets quality standards and satisfies user requirements.

Benefits of test strategy

A well-constructed software test strategy offers numerous benefits, including:

- Clarity: Establishes clear direction and focus for all testing efforts.

- Risk management: Detects and addresses key risks early in the development cycle.

- Process optimization: Improves efficiency by optimizing processes, resources, and schedules.

- Regulatory compliance: Supports compliance with industry regulations and standards.

- Team alignment: Strengthens collaboration by aligning the team around shared objectives.

- Resource management: Enables smart and effective resource allocation.

- Progress tracking: Simplifies tracking and reporting of testing progress.

- Functional validation: Ensures thorough validation of all essential features and functions.

Types of software testing strategy

Here are the main types of software testing strategies commonly used to ensure quality and efficiency throughout the development lifecycle:

Static vs dynamic test strategy

| | Static test strategy | Dynamic test strategy |
|---|---|---|
| Definition | Involves reviewing code and documentation without executing the software | Executes the software to observe its behavior in real-world scenarios, ensuring it functions as expected |
| Activities | | |
| Benefits | | |
| Limitations | | |
| Best used | In the early stages of the development lifecycle | Throughout development, especially in later stages |

Preventive vs reactive test strategy

- Preventive test strategy: Tests are designed as early as possible, before the software is built, so that defects are prevented or caught at the requirements and design stage.

- Reactive test strategy: Tests are designed and run after the software has been delivered, in response to the actual behavior of the system under test.

Manual vs automated test strategy

| | Manual test strategy | Automated test strategy |
|---|---|---|
| Definition | Test cases are executed by human testers without automation tools | Scripts or tools execute test cases without human intervention |
| Activities | Writing test cases, manually executing steps, logging defects, exploratory testing, usability testing | Writing automation scripts, setting up test frameworks, executing automated tests, maintaining scripts, integrating with CI/CD pipelines |
| Benefits | | |
| Limitations | | |
| Best used | | |

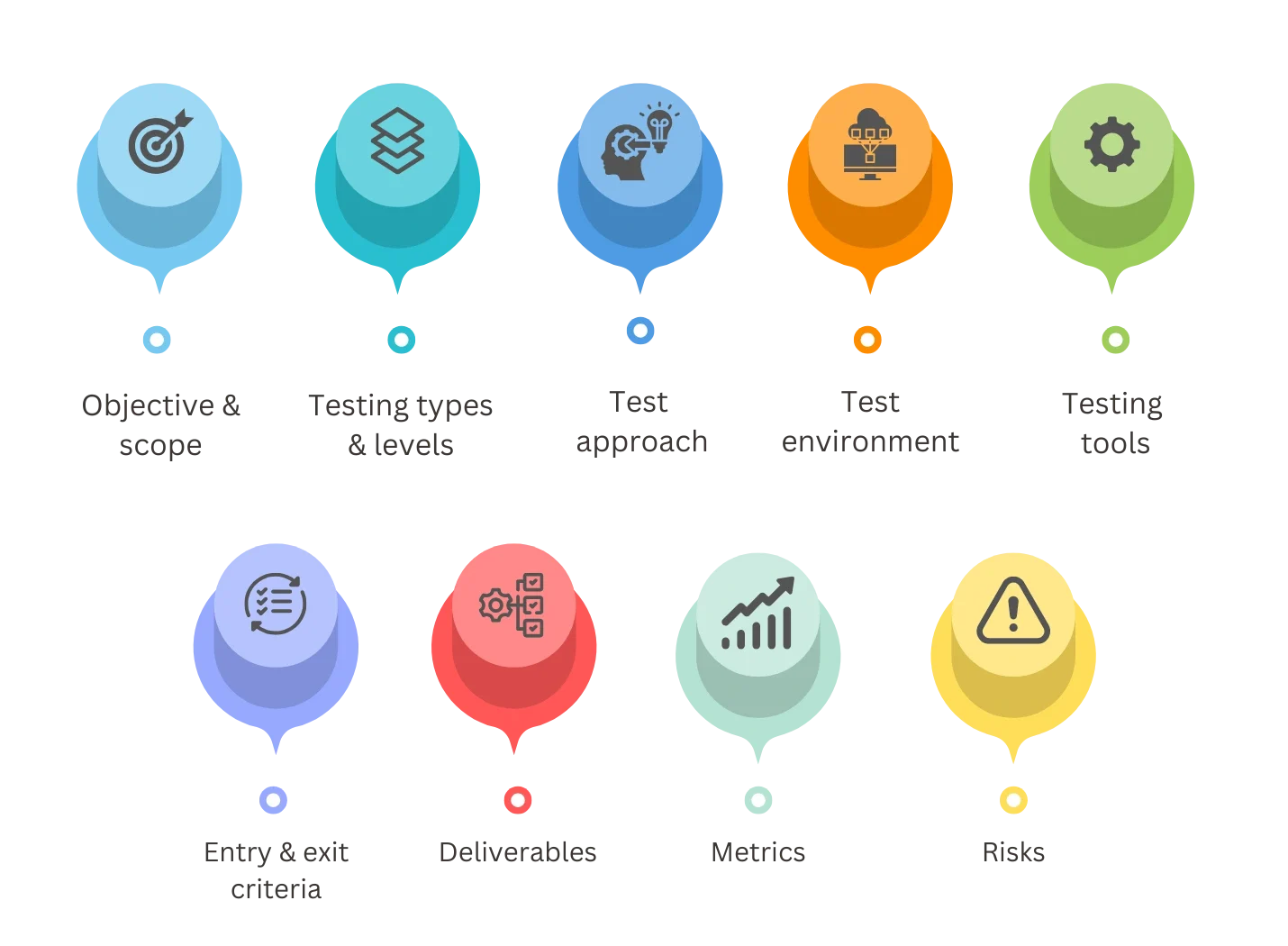

The 10 Must-Haves for an Effective Test Strategy

1. Objectives & scope

- Set objectives: Identify what needs to be validated, whether it’s functional aspects like features or non-functional elements such as security, performance, and usability. Make sure these objectives align with the project’s overall requirements.

- Define scope: Clearly specify what will and won’t be tested to prevent scope creep. This should cover:

  - Features included in testing

  - Features explicitly excluded

  - Types of testing to be performed

  - Details of the test environment

- Prioritize critical areas:

  - High-risk or heavily used features

  - Key user journeys that impact engagement

  - External integrations like APIs or payment gateways

  - Recent updates or changes that are more likely to introduce bugs

2. Testing types & levels

Specify the different testing types to be used (e.g., functional, regression, performance, usability, security). Then break them down by level:

- Unit testing (base): Verifies individual components in isolation.

- Integration testing (middle): Checks data flow between connected units or services.

- End-to-End (E2E) testing (top): Simulates real-world scenarios from a user’s perspective.
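To make the difference between the first two levels concrete, here is a minimal sketch in Python. The `apply_discount` function and the `Cart` class are hypothetical examples invented for illustration, not part of any real project. The unit test exercises one function in isolation, while the integration test checks that two pieces work together.

```python
from dataclasses import dataclass, field


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount (hypothetical business rule)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


@dataclass
class Cart:
    """Hypothetical component that depends on apply_discount."""
    items: list[float] = field(default_factory=list)

    def add(self, price: float) -> None:
        self.items.append(price)

    def total(self, discount_percent: float = 0) -> float:
        return apply_discount(sum(self.items), discount_percent)


def test_unit_apply_discount():
    # Unit level: a single function with no collaborators.
    assert apply_discount(200.0, 25) == 150.0


def test_integration_cart_total():
    # Integration level: Cart and apply_discount working together.
    cart = Cart()
    cart.add(40.0)
    cart.add(60.0)
    assert cart.total(discount_percent=10) == 90.0
```

Both tests can be collected by a runner such as pytest. An E2E test would sit above these and drive the whole application the way a user would, which usually requires a deployed build and a browser or device.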

3. Test approach

This section outlines the overall methodology used to ensure software quality and reliability. Widely adopted models include:

- Test pyramid: Emphasizes a high number of fast, automated unit tests; fewer integration tests; and minimal E2E tests for critical paths.

- Risk-based testing: Focuses on high-impact and high-probability failure areas. Prioritizes critical features, business workflows, and areas prone to defects.

- Shift-left testing: Involves QA early in the development lifecycle. Encourages early test design, collaboration with developers, and integration with CI/CD pipelines.

- Exploratory testing: Hands-on, unscripted testing that uncovers edge cases and usability issues. Complements automation by focusing on areas where unexpected behaviors may occur.
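As a rough illustration of the test pyramid, the sketch below checks whether a suite has the expected shape: more unit tests than integration tests, and more integration tests than E2E tests. The function names and the sample counts are assumptions made up for this example; real numbers would come from your test runner or CI reports.

```python
def pyramid_shape(counts: dict[str, int]) -> dict[str, float]:
    """Return each level's share of the whole suite as a fraction."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("suite is empty")
    return {level: n / total for level, n in counts.items()}


def is_pyramid(counts: dict[str, int]) -> bool:
    """True when unit > integration > e2e, the ordering the pyramid describes."""
    return counts["unit"] > counts["integration"] > counts["e2e"]


# Hypothetical suite sizes, for illustration only.
suite = {"unit": 700, "integration": 200, "e2e": 100}
print(pyramid_shape(suite))
print(is_pyramid(suite))
```

A check like this is only a heuristic. The pyramid describes a tendency, not a fixed ratio, so treat a failing result as a prompt to review the suite rather than as a hard rule.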

4. Test environment

The test environment is where the actual testing is executed. It should closely replicate the production environment to ensure accurate results. Additionally, it must include extra tools and configurations that support the testing process efficiently.

A test environment typically includes two core components:

Hardware

This covers the physical and virtual infrastructure required for testing, such as servers, desktop machines, mobile devices, routers, network switches, and firewalls, depending on the system architecture.

Software

This includes everything from operating systems, browsers, and databases to APIs, third-party services, testing frameworks, and any dependent software libraries.

For performance testing, it’s crucial to emulate real-world network conditions. This involves configuring elements such as network bandwidth limitations, latency simulation, proxy settings, firewalls, VPNs, and relevant network protocols.

An example test environment might look like this:

| Category | Web testing | Mobile testing |
|---|---|---|
| Hardware | Windows 11: AMD Ryzen 7, 32GB RAM, 512GB SSD; macOS Ventura: Apple M2 chip, 16GB RAM, 1TB SSD | iPhone 14 (iOS 16); Samsung Galaxy S22 (Android 13); OnePlus 9 (Android 12); iPad Mini (iOS 15) |
| Software | Google Chrome; Firefox; Safari (iOS); Microsoft Edge; DuckDuckGo Browser; Vivaldi | |
| Network | Use both cellular and Wi-Fi connections; emulate variable network speeds: 3G to 5G | |
| Database | PostgreSQL 14 | |
| CI/CD setup | GitHub Actions or Azure DevOps | |

5. Testing tools

This section specifies the tools and frameworks to be used throughout the testing lifecycle. The exact list depends on the project, but it typically covers:

- Automation frameworks: Tools will be used to automate repetitive test cases, such as regression and smoke tests, for faster feedback and reduced manual effort. These may include frameworks suited for UI, API, and unit testing across different platforms.

- Test management: A centralized system will be used to manage test cases, plan executions, track coverage, and generate reports. This ensures traceability from requirements to test results.

- Bug tracking: An issue tracking system will support defect logging, prioritization, assignment, and resolution to maintain transparency and accountability throughout the QA cycle.

- Continuous Integration & Testing: Integration with CI/CD pipelines will enable automated execution of tests with every build or code change, ensuring early detection of issues and smoother deployments.

6. Entry & exit criteria

Entry and exit criteria serve as checkpoints: they confirm that the product is ready for testing, and that it meets quality expectations before progressing to the next stage.

Entry Criteria: Conditions that must be met before testing begins:

- Code is complete; only bug fixes are permitted

- Unit and integration tests have passed

- Core functions (e.g., login, navigation) are operational

- Test environment is properly configured

- Known issues are documented

- Test data is prepared and available

Exit Criteria: Conditions that must be fulfilled to conclude testing:

- All planned test cases have been executed

- Critical defects are resolved, and remaining issues are within acceptable thresholds

- Essential user workflows have been verified

- Test results and reports have been reviewed

- The build is stable and ready for deployment
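Exit criteria are easier to enforce when they are checked automatically. The sketch below is one possible way to express a few of them as a gate. The `RunSummary` fields, the `unmet_exit_criteria` function, and the default threshold of five minor defects are all assumptions chosen for illustration; your own criteria and thresholds will differ.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    """Hypothetical summary of a test cycle."""
    planned: int
    executed: int
    open_critical_defects: int
    open_minor_defects: int


def unmet_exit_criteria(run: RunSummary, max_minor_defects: int = 5) -> list[str]:
    """Return the criteria that are not yet met; an empty list means testing can conclude."""
    unmet = []
    if run.executed < run.planned:
        unmet.append(f"{run.planned - run.executed} planned test cases not executed")
    if run.open_critical_defects > 0:
        unmet.append(f"{run.open_critical_defects} critical defects still open")
    if run.open_minor_defects > max_minor_defects:
        unmet.append("remaining defects exceed the acceptable threshold")
    return unmet


# Hypothetical cycle: 10 cases not run and 2 critical defects open.
blocked = RunSummary(planned=120, executed=110, open_critical_defects=2, open_minor_defects=3)
for reason in unmet_exit_criteria(blocked):
    print("Not ready:", reason)
```

Criteria such as "test results have been reviewed" still need a human sign-off, so a gate like this complements the checklist rather than replacing it.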

7. Deliverables

Test deliverables are the artifacts produced by the testing effort, and they should map back to the objectives set earlier.

Outline the essential artifacts and documents that should be created throughout the testing process to track progress and communicate findings. While the test strategy provides an overarching view, it’s important to give a concise summary of the key deliverables, without going into excessive detail for each item.

8. Metrics

Establishing key performance indicators (KPIs) and success metrics is essential for evaluating the effectiveness and quality of the testing process. These metrics provide a shared understanding among team members, offering a common language for tracking progress and aligning goals.

Some commonly used testing metrics include:

- Test coverage: The percentage of the codebase that is covered by the test suite, indicating how much of the application is being tested.

- Defect density: The number of defects found within a specific code module, typically calculated as defects per thousand lines of code (KLOC). A lower defect density generally indicates higher code quality, while a higher value signals potential areas of vulnerability.

- Defect leakage: Defects that go undetected during earlier testing phases but are later discovered either in subsequent phases or after the product has been released.

- Mean time to failure (MTTF): The average amount of time that a system or component operates before experiencing a failure, used to measure the reliability of a product.

These metrics help quantify testing performance and guide teams in improving processes and maintaining high standards.
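The metrics above reduce to simple arithmetic. The sketch below shows one way to compute them. The function names and the sample figures are made up for illustration. Note that defect leakage has more than one formulation in practice; the version here, escaped defects as a share of all defects found, is an assumption rather than a universal definition.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Share of the codebase exercised by the test suite, as a percentage."""
    return 100 * covered_lines / total_lines


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


def defect_leakage(found_in_phase: int, found_after_phase: int) -> float:
    """Escaped defects as a percentage of all defects found (one common formulation)."""
    return 100 * found_after_phase / (found_in_phase + found_after_phase)


def mean_time_to_failure(hours_until_failure: list[float]) -> float:
    """Average operating time before a failure, in hours."""
    return sum(hours_until_failure) / len(hours_until_failure)


# Hypothetical figures, for illustration only.
print(coverage_percent(850, 1000))               # 85.0
print(defect_density(30, 15000))                 # 2.0 defects per KLOC
print(defect_leakage(45, 5))                     # 10.0
print(mean_time_to_failure([100.0, 150.0, 200.0]))  # 150.0
```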

9. Risks

Identify potential risks and establish clear mitigation strategies or contingency plans in case these risks materialize.

Testers typically perform a risk analysis, scoring each risk as probability of occurrence × impact, to prioritize which risks should be addressed first.

For instance, if the team realizes that the timeline is too tight and they lack the necessary technical expertise to meet the objectives, this would be a high-probability, high-impact scenario. In such a case, the team must have a contingency plan in place, such as adjusting the objectives, investing in upskilling the team, or outsourcing to ensure timely delivery.
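The probability × impact calculation can be sketched in a few lines. The 1 to 5 scales and the sample risks below are assumptions made for illustration; teams often use their own scales or categories such as low, medium, and high.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: int  # assumed scale: 1 (unlikely) to 5 (almost certain)
    impact: int       # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Risk score = probability of occurrence x impact.
        return self.probability * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Sort risks so the highest score comes first."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical risk register.
register = [
    Risk("Test environment outage", probability=2, impact=4),
    Risk("Tight timeline with a skills gap", probability=5, impact=5),
    Risk("Unstable third-party API", probability=3, impact=3),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}")
```

With these sample values, the tight timeline scores 25 and lands at the top of the list, which matches the high-probability, high-impact scenario described above.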

10. Review & approval

The test strategy document should be reviewed carefully by the business team, QA Lead, and Development Team Lead. Based on the insights from this document, detailed test plans can be created for individual sub-projects or for each sprint iteration.

Software Test Strategy Sample Document

| Section | Details |
|---|---|
| Product, revision and overview | |
| Product history | |
| Features to be tested | |
| Features not to be tested | |
| Configurations to be tested | Excluded configurations: Android versions below 10.0 and iOS versions below 13 are not included in this testing cycle |
| Environmental requirements | |
| System test methodology | |
| Initial test requirements | |
| Test entry criteria | |
| Test exit criteria | |
| Risks | |
| References | |

Software Test Strategy vs Test Plan

Many people still confuse a software test strategy with a test plan. While both are important in the testing process, they serve different purposes.

The test strategy offers a broader, high-level view compared to the test plan. It sets the overall direction for quality assurance, and every test plan should align with the principles and guidelines defined in the test strategy.

Here’s a detailed breakdown of the two:

| | Test strategy | Test plan |
|---|---|---|
| Purpose | Outlines a high-level approach, objectives, and scope for testing the software project | Provides detailed instructions, procedures, and specific tests to be conducted |
| Focus | Testing approach, test levels, types, and techniques | Specific test objectives, cases, data, and expected results |
| Audience | Intended for stakeholders, project managers, and senior test team members | Intended for testing team members, test leads, testers, and relevant stakeholders |
| Scope | Covers the entire testing effort across the project | Focuses on a specific phase, feature, or component of the software |
| Level of detail | More abstract, with less granular detail | Highly detailed, specifying test scenarios, cases, scripts, and data |
| Flexibility | More flexible, allowing for changes in project requirements | Relatively rigid and less flexible once the testing phase begins |
| Longevity | Remains stable throughout the project lifecycle | Evolves and adjusts throughout the testing process based on feedback |

Conclusion

Effective software testing strategies are essential for ensuring the success of your project. A solid test strategy helps prevent errors, improve efficiency, and deliver high-quality results. Understanding what a test strategy is and reviewing a sample test strategy document can help you structure your approach effectively.

It’s also important to understand the difference between a test strategy and a test plan: the strategy provides the high-level approach, while the test plan dives into the specifics. At LARION, we create tailored software testing strategies that align with your goals and help ensure successful project outcomes.

Ready to build a customized test strategy for your project? Contact LARION today for a free consultation.