A survey by QualiTest Group revealed that an astonishing 88% of app users abandon applications due to bugs and glitches. This statistic underscores the critical importance of software testing in today’s competitive landscape, where user experience can make or break an application’s success.

In this blog post, we will take a deep dive into the world of software testing, exploring its various types, methods, and lifecycle.

What is software testing?

Software testing is the process of evaluating a software application to ensure its quality, functionality, and performance align with user expectations and requirements.

To conduct testing, testers execute the software under controlled conditions, simulating a variety of scenarios, environments, and user interactions. This approach allows them to identify any defects that may arise during the testing process.

Why is software testing crucial?

Some of the benefits of software testing include:

- Bug detection and prevention: identifies and resolves bugs or issues (architectural flaws, poor design, invalid functionality, security and scalability issues) early in the development process, reducing costs and time.

- Improved product quality: ensures that the software functions efficiently across various platforms, devices, and environments.

- Enhanced customer trust: high-quality software leads to a frictionless digital experience and satisfied customers.

- Compliance requirements: in industries where regulatory compliance is crucial (such as healthcare, finance, or aviation), testing helps ensure that the software meets the applicable regulations and standards.

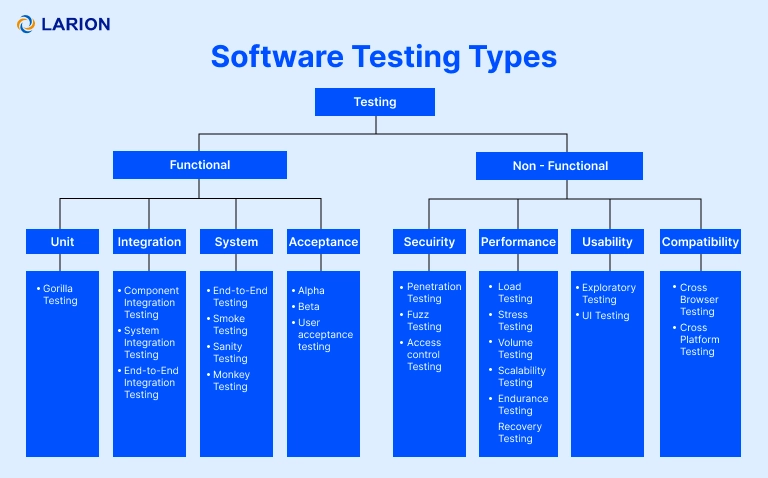

What are software testing types?

There are two main types of software testing that testers commonly apply: functional testing and non-functional testing.

Functional testing

This type of testing focuses on verifying whether the application behaves according to specified requirements. It checks the system’s functions by feeding them inputs and examining the outputs to ensure they match the expected results. The primary goal of functional testing is to validate that each feature of the software operates correctly.

This umbrella term includes a variety of testing types, each designed to serve a specific purpose. Here are the common types of software testing categorized under functional testing:

- Unit testing: tests individual units of code in isolation to ensure each is working as expected. A unit is the smallest testable component of any software, typically responsible for a specific set of functionalities. Frameworks like JUnit and TestNG are commonly employed to automate this testing process.

- Integration testing: verifies that various modules or components work together correctly. Integration testing can be conducted all at once (the Big Bang approach) or incrementally, for example top-down or bottom-up.

- System testing: once unit testing and integration testing are completed, system testing can be conducted to evaluate the entire software system as a whole and assess its compliance with the specified requirements.

- Acceptance testing: confirms the software meets the business requirements and is ready for delivery.

- Regression testing: checks that recent changes haven’t negatively affected existing functionality.
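To make the idea of a unit test concrete, here is a minimal sketch. It uses Python’s built-in `unittest` module rather than JUnit or TestNG, and the `apply_discount` function and its rules are hypothetical, invented purely for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if price < 0:
        raise ValueError("price must not be negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each method checks one behavior of the unit in isolation."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


def run_unit_tests() -> unittest.TestResult:
    """Run the suite programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Re-running the same suite after every code change is also the simplest form of regression testing: if a previously passing test starts failing, a recent change has broken existing behavior.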

Non-functional testing

Non-functional testing, on the other hand, evaluates the performance, usability, reliability, and other attributes of a software application that do not relate directly to specific functionalities.

Under non-functional testing, there are many testing types:

- Security testing: evaluates the software’s ability to protect against threats and vulnerabilities.

- Performance testing: assesses the system’s speed, responsiveness, and stability under different conditions.

- Usability testing: measures how easy and intuitive the software is for users.

- Compatibility testing: verifies that the software works across various platforms, devices, operating systems, and browsers.

The choice of which type of software test to utilize depends on the specific test scenarios, available resources, and business requirements.

What are common software testing methodologies?

Testing methodologies are specific techniques used to execute testing. They focus on the how of testing, providing guidelines for designing test cases, selecting test data, and evaluating test results.

Common testing methodologies include:

| Parameter | Black box | White box | Grey box |
| --- | --- | --- | --- |
| Focus | Tests the software’s functionality without looking at internal code or structure | Tests the internal workings and code logic of the application | Combines both black-box and white-box testing by having partial knowledge of the internal code |
| Knowledge required | No knowledge of internal structure | Detailed knowledge of internal structure | Limited knowledge of internal structure |
| Techniques | | | |
| Test cases | Based on specifications (requirements) | Derived from code structure | Combination of specifications and code |
| Tools | Test automation tools (Selenium, JMeter) | Code coverage tools (JaCoCo, SonarQube) | Both test automation and code coverage tools |
| Advantages | Independent of implementation, can be performed early in development | High code coverage, identifies defects at the unit level | Combines benefits of black box and white box, effective for complex systems |
| Disadvantages | May miss internal errors, limited to functional testing | Requires in-depth knowledge of code, time-consuming | Can be challenging to balance black box and white box aspects |
| Typical use cases | Functional testing, user acceptance testing | Unit testing, integration testing | System testing, regression testing |
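The difference between test cases "based on specifications" and test cases "derived from code structure" is easier to see side by side. The sketch below is only an illustration: `shipping_fee` and its pricing rules are hypothetical, and the two case lists show one plausible way a tester might choose inputs under each methodology.

```python
def shipping_fee(weight_kg: float) -> int:
    """Hypothetical function: fee in USD based on parcel weight."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 1:
        return 5
    if weight_kg <= 10:
        return 12
    return 20


# Black-box view: cases come from the written specification only
# ("up to 1 kg costs 5, up to 10 kg costs 12, anything heavier costs 20").
black_box_cases = [(0.5, 5), (1, 5), (1.01, 12), (10, 12), (10.01, 20)]

# White-box view: cases are picked by reading the code so that every
# fee-returning branch of shipping_fee is executed at least once.
white_box_cases = [(1, 5), (5, 12), (50, 20)]


def check(cases):
    """Return the inputs whose actual fee differs from the expected fee."""
    return [w for w, expected in cases if shipping_fee(w) != expected]


def rejects_non_positive_weight() -> bool:
    """Covers the remaining branch: invalid input raises ValueError."""
    try:
        shipping_fee(0)
    except ValueError:
        return True
    return False
```

A grey-box tester would typically mix the two: start from the specification, then use partial knowledge of the code to add the inputs most likely to expose a defect.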

What are software testing approaches?

When choosing which types of software testing to implement, testers also have two approaches to consider: manual testing and automation testing.

In comparing manual testing to automation testing, no single factor dictates which approach is superior. The choice ultimately depends on the specific circumstances of each case.

Manual vs automated testing

Beyond the characteristics of each testing type, the decision between manual and automated testing is influenced by factors such as project requirements, time constraints, budget, system complexity, and the skills of the testing team, as outlined below.

| Criteria | Manual testing | Automation testing |
| --- | --- | --- |
| Definition | Testers manually navigate through different websites or app features to uncover bugs, errors, and anomalies | Testers design frameworks and write test scripts that automate the user interactions needed for testing a website or application |
| Accuracy | Prone to human errors and inconsistencies, especially in repetitive tasks | Provides consistent results and reduces the likelihood of human error, ensuring higher accuracy |
| Scalability | Manual testing requires more time when conducted on a large scale | Highly scalable, as automated tests can execute across multiple machines simultaneously |
| Flexibility | More flexible in adapting to changes in requirements or application behavior. Testers can quickly shift focus based on observations | Less flexible since any changes in the application may require updates to the test scripts, which can be time-consuming |
| Maintenance | Easier to maintain in rapidly changing environments since no scripts are involved | Requires ongoing maintenance of test scripts to ensure they remain relevant as the application evolves |
| Team skills | Requires an understanding of the application and testing principles | Requires technical skills to write and maintain test scripts, often necessitating knowledge of programming languages and testing frameworks |
| Investment | Lower initial costs but may become more expensive over time as more testing is required | Higher initial investment in tools and scripting but reduces long-term costs through efficiency and reduced human resources needed |
| Test reusability | Limited, since manual tests must be redone each time | High, because automated tests can be reused across various projects |
| Initial setup time | Minimal, as it doesn’t involve scripting or the need for tool setup | High, due to the requirement to create test scripts and configure tools |

When should you use automation testing?

- Numerous repetitive tests: Automation testing is ideal for conducting repetitive tests, particularly when dealing with a high volume.

- Limited human resources: In projects with only a few dedicated testers, automation becomes essential to ensure tests are completed within deadlines. This approach allows testers to concentrate on more complex issues that require their expertise, rather than being bogged down with basic, repetitive testing tasks.

Typical use cases for automated testing are regression, smoke, integration, load, and cross-browser testing.
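Repetitive checks with many inputs are where automation pays off most clearly. As a rough sketch, the data-driven test below repeats one check over a table of inputs using `unittest` and its `subTest` helper. The `is_valid_username` rule and the sample data are hypothetical.

```python
import unittest


def is_valid_username(name: str) -> bool:
    """Hypothetical rule: 3 to 12 characters, letters and digits only."""
    return 3 <= len(name) <= 12 and name.isalnum()


# Each tuple is one repetition of the same check with different data.
CASES = [
    ("bob", True),
    ("al", False),        # too short
    ("a" * 13, False),    # too long
    ("user_01", False),   # underscore is not alphanumeric
    ("User2024", True),
]


class UsernameRegressionTest(unittest.TestCase):
    def test_usernames(self):
        for name, expected in CASES:
            # subTest reports each failing input separately
            with self.subTest(name=name):
                self.assertEqual(is_valid_username(name), expected)


def run_suite() -> unittest.TestResult:
    """Run the data-driven test without exiting the interpreter."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(UsernameRegressionTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Adding a new scenario is then a one-line change to `CASES`, whereas a manual tester would have to repeat the whole check by hand.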

When should you use manual testing?

- Flexibility is needed: Manual testing lets QA professionals run a test quickly and see the result immediately, whereas automation requires more extensive setup that consumes additional time and resources. Manual tests therefore offer greater flexibility within the testing pipeline. Once the same test has to be repeated with many inputs and parameters, however, automation becomes the more effective choice.

- Short-term projects: Given that automation demands a significant investment of time and resources for setup, it is often impractical to implement for short-term projects that focus on minor features or minimal code changes. The effort and cost involved in establishing the testing infrastructure would be excessive for such limited scope.

- End-user usability assessment: While automation can be highly effective, it cannot evaluate whether software is visually appealing or user-friendly. No machine can accurately determine if a website or app provides a satisfactory user experience; this requires actual human testers who can use their instincts and insights to draw meaningful conclusions.

As manual testing works best when cognitive and behavioral skills are essential for evaluating the software, testing types like exploratory, usability, UAT or ad-hoc testing are well-suited for this method.

Software Testing Life Cycle

Many software testing projects follow a process known as the Software Testing Life Cycle (STLC). The STLC comprises six essential activities designed to ensure that all software quality objectives are achieved, as outlined below.

1. Requirement analysis

In this stage, QA testers collaborate with stakeholders involved in the development process to identify and understand testing requirements. The insights gained from these discussions are compiled into the Requirement Traceability Matrix (RTM) document, which serves as the foundation for developing the test strategy.

Three roles are central to this process:

- Product Owner: Represents the business side and aims to address a specific problem.

- Developer: Represents the development side and focuses on creating a solution to meet the Product Owner’s needs.

- Tester: Represents the QA side and verifies whether the solution functions as intended while identifying any potential issues.

Testers and developers must work together to assess the feasibility of implementing the business requirements. If the requirements cannot be fulfilled within the established constraints, limitations, or available resources, they must consult with the business side—either the Business Analyst, Project Manager, or client—to make necessary adjustments or explore alternative solutions.
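A Requirement Traceability Matrix can be as simple as a mapping from each requirement to the test cases that cover it. The sketch below is one hypothetical way to model it. The requirement IDs, descriptions, and most test case IDs are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One RTM row: a requirement and the test cases that trace to it."""
    req_id: str
    description: str
    test_case_ids: list[str] = field(default_factory=list)


def uncovered(requirements: list[Requirement]) -> list[str]:
    """Return IDs of requirements that no test case traces back to."""
    return [r.req_id for r in requirements if not r.test_case_ids]


# Hypothetical matrix for a small banking app
rtm = [
    Requirement("REQ-01", "User can log in", ["TC001", "TC002"]),
    Requirement("REQ-02", "User can transfer money", ["TC021"]),
    Requirement("REQ-03", "User can export statements"),
]

# Requirements without coverage are candidates for new test cases
gaps = uncovered(rtm)
```

Checking for gaps like this before test planning starts helps ensure that every agreed requirement is verified by at least one test.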

2. Test planning

Following a comprehensive analysis, a software test plan is developed. It typically covers:

- Test objectives: Specify attributes such as functionality, usability, security, performance, and compatibility.

- Output and deliverables: Document the test scenarios, test cases, and test data that will be created and monitored.

- Test scope: Identify which areas and functionalities of the application will be tested (in-scope) and which will not (out-of-scope).

- Resources: Estimate costs for test engineers, manual and automated testing tools, environments, and test data.

- Timeline: Set expected milestones for test-specific activities, in conjunction with development and deployment schedules.

- Test approach: Evaluate the testing techniques (white box/black box testing), test levels (unit, integration, and end-to-end testing), and test types (regression, sanity testing) to be utilized.

To maintain greater control over the project, software testers can incorporate a contingency plan to adjust variables if the project takes an unexpected turn.

3. Test case development

Once you have outlined the scenarios and functionalities to be tested, you can begin crafting the software test cases.

Here’s the format for a basic test case:

| Component | Details |
| --- | --- |
| Test Case ID | TC021 |
| Description | Verify successful transaction |
| Preconditions | The user has a valid account with the application. The user is logged into the application. The user has sufficient balance to cover the transaction amount. The recipient’s account is valid and active. |
| Test steps | 1. Navigate to the transaction section. 2. Enter transaction details. 3. Proceed with transaction. 4. Review transaction. 5. Authentication. 6. Confirm successful transaction: check that a notification appears, confirming that the transaction was successful, along with the transaction ID and relevant details. |
| Test data | Recipient Account: [email protected]. Amount: 100 USD. Transaction Description: Payment for services (optional). |
| Expected Result | The application displays a confirmation message indicating the transaction was successful. The confirmation message includes the transaction ID and relevant transaction details. The user’s account balance is updated to reflect the deducted amount. The recipient receives a notification of the successful transaction (if applicable). |
| Actual Result | (To be filled after execution) |
| Postconditions | |
| Pass/Fail Criteria | |
| Comments | |
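If test cases are stored in a tool or a script rather than a document, the same fields can be captured in a small data structure. The sketch below is an assumption about how that might look, not a standard format. `ManualTestCase` and its `verdict` rule are hypothetical, and comparing results as exact strings is a deliberate simplification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ManualTestCase:
    """Hypothetical record mirroring the test case format above."""
    case_id: str
    description: str
    preconditions: list[str]
    steps: list[str]
    expected_result: str
    actual_result: Optional[str] = None  # filled in after execution

    def verdict(self) -> str:
        """Derive the outcome by comparing actual and expected results."""
        if self.actual_result is None:
            return "Untested"
        if self.actual_result == self.expected_result:
            return "Passed"
        return "Failed"


tc021 = ManualTestCase(
    case_id="TC021",
    description="Verify successful transaction",
    preconditions=["User is logged in", "User has sufficient balance"],
    steps=["Navigate to the transaction section", "Enter transaction details"],
    expected_result="Confirmation message with transaction ID is displayed",
)
```

Before execution `tc021.verdict()` reports "Untested". Once the tester records an actual result, the same call reports "Passed" or "Failed".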

4. Test environment setup

This step can be conducted simultaneously with test case development. A test environment refers to the software and hardware configurations used for testing the application, including the database server, front-end running environment, browser, network, and hardware.

For instance, if you want to test a mobile app, you’ll need the following:

- Development environment: This is where developers build, debug, and conduct early-stage tests on the mobile app:

– Mobile development platforms, such as Xcode for iOS and Android Studio for Android.

– Simulators and emulators for initial testing on various virtual devices.

– Local databases and API mock services for preliminary integration testing.

– Continuous Integration (CI) system to automatically execute unit and integration tests after each build.

- Physical devices: These are essential for identifying issues that simulators may not capture:

– A range of physical devices with different models, screen sizes, and hardware capabilities (e.g., iPhone 12, Galaxy S21, Google Pixel)

– Various iOS and Android operating system versions to ensure compatibility (e.g., iOS 14, Android 10, 11).

– Automation tools like Appium or Katalon Studio to facilitate testing across multiple devices.

- Emulation environment: This allows for rapid testing across different devices and operating systems without requiring physical devices:

– Emulators for Android (via Android Studio) and simulators for iOS (via Xcode).

– Configurations that simulate various screen resolutions, RAM sizes, and CPU speeds to mimic different device conditions.

– Debugging tools integrated into development environments, such as those found in Xcode or Android Studio
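When planning device coverage, it can help to write the combinations down as data. The following sketch builds a simple device and OS version matrix from the examples above. The pairing of devices with OS versions is illustrative only and is not a statement about which versions each device actually supports.

```python
# Example devices and versions taken from the list above; the mapping
# between them is an assumption made for illustration.
devices = ["iPhone 12", "Galaxy S21", "Google Pixel"]
platform_of = {
    "iPhone 12": "iOS",
    "Galaxy S21": "Android",
    "Google Pixel": "Android",
}
os_versions = {"iOS": ["14"], "Android": ["10", "11"]}


def build_matrix() -> list[tuple[str, str, str]]:
    """Pair every device with each OS version listed for its platform."""
    return [
        (device, platform_of[device], version)
        for device in devices
        for version in os_versions[platform_of[device]]
    ]


matrix = build_matrix()
for device, platform, version in matrix:
    print(f"{device}: {platform} {version}")
```

Each row of the matrix is one configuration to run on a physical device or an emulator. A real project would trim the list to the combinations its users actually have.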

5. Test execution

With clear objectives established, the QA team develops the test cases and test scripts and prepares the necessary test data for execution. Tests can be executed either manually or automatically. Once the tests are completed, any defects discovered are tracked and reported to the development team, which addresses them.

During execution, each test case carries one of the following statuses:

- Untested: The test case has not yet been executed.

- Blocked/On Hold: This status applies to test cases that cannot be executed due to dependencies such as unresolved defects, lack of test data, system downtime, or incomplete components.

- Failed: This status indicates that the actual outcome did not match the expected outcome, meaning the test conditions were not met. The team will then investigate to determine the root cause.

- Passed: The test case was executed successfully, with the actual outcome aligning with the expected result. A high number of passed cases is desirable, as it reflects good software quality.

- Skipped: A test case may be skipped if it is not relevant to the current testing scenario. The reason for skipping is typically documented for future reference.

- Deprecated: This status is assigned to test cases that are no longer valid due to changes or updates in the application. These test cases can be removed or archived.
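These statuses map naturally onto an enumeration, which makes it easy to tally a test run. The sketch below is a minimal illustration. The test case IDs and their outcomes are made up.

```python
from collections import Counter
from enum import Enum


class Status(Enum):
    """The execution statuses described above."""
    UNTESTED = "Untested"
    BLOCKED = "Blocked/On Hold"
    FAILED = "Failed"
    PASSED = "Passed"
    SKIPPED = "Skipped"
    DEPRECATED = "Deprecated"


def summarize(results: dict[str, Status]) -> dict[str, int]:
    """Count how many test cases currently hold each status."""
    counts = Counter(results.values())
    return {status.value: counts.get(status, 0) for status in Status}


# Hypothetical outcome of one test run
run = {
    "TC019": Status.PASSED,
    "TC020": Status.PASSED,
    "TC021": Status.FAILED,
    "TC022": Status.BLOCKED,
}

summary = summarize(run)
```

A tally like `summary` gives the team a quick view of how much of the run passed, failed, or could not be executed.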

6. Test closure

This is the final stage of the STLC, which involves the formal conclusion of testing activities after all test cases have been executed, bugs have been reported and resolved, and the exit criteria have been met. The primary goal of test closure is to ensure that all testing-related activities are complete and that the software is in optimal condition for release. Importantly, test closure should also encompass the documentation of the testing process.

The following activities are included in the test closure phase:

- Test summary report: A comprehensive test report is created, containing vital information related to test execution, such as the number of test cases executed, statistics on pass/fail/skip outcomes, testing efforts and time metrics, the number of defects identified, resolved, and closed, as well as any open issues that will be considered known defects in production. This report serves as a record for all stakeholders and can be referenced in the future.

- Defect management: All issues identified during execution are tracked and maintained in a defect management tool, making them accessible to all stakeholders.

- Evaluating exit criteria: The exit criteria defined in the test plan are revisited and reviewed by the testing team to ensure that all criteria have been met before releasing the software into production.

- Feedback/Improvements: Any challenges or negative experiences encountered during the testing process are analyzed and addressed. Feedback collected from the testing team is used to implement improvements, enhancing the efficiency of future testing processes.

- Sign-off: Finally, the test closure is formally signed off by project managers and other key stakeholders to confirm that the testing phase has been satisfactorily completed and meets the defined criteria. This signifies that the testing activities have been reviewed and approved, and the software is ready for production launch.
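Evaluating exit criteria often comes down to comparing a few numbers from the test summary report against agreed thresholds. The sketch below assumes two hypothetical criteria, a minimum pass rate of 95% and zero open critical defects. Real projects define their own criteria in the test plan.

```python
from dataclasses import dataclass


@dataclass
class ClosureSummary:
    """Hypothetical subset of the figures in a test summary report."""
    executed: int
    passed: int
    failed: int
    skipped: int
    open_critical_defects: int

    @property
    def pass_rate(self) -> float:
        """Share of executed test cases that passed (0.0 if none ran)."""
        return self.passed / self.executed if self.executed else 0.0


def exit_criteria_met(
    summary: ClosureSummary,
    min_pass_rate: float = 0.95,   # assumed threshold
    max_open_critical: int = 0,    # assumed threshold
) -> bool:
    """Check the summary against the assumed exit criteria."""
    return (
        summary.pass_rate >= min_pass_rate
        and summary.open_critical_defects <= max_open_critical
    )


report = ClosureSummary(
    executed=200, passed=192, failed=6, skipped=2, open_critical_defects=0
)
ready_for_sign_off = exit_criteria_met(report)
```

With these sample figures the pass rate is 96% and no critical defects remain open, so the assumed criteria are met. A single open critical defect would block sign-off.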

Popular software testing models

Software testing models provide a structured framework for organizing and executing testing activities. They outline the sequence of testing phases, the relationships between them, and the deliverables associated with each phase.

Here are some of the most commonly used testing models

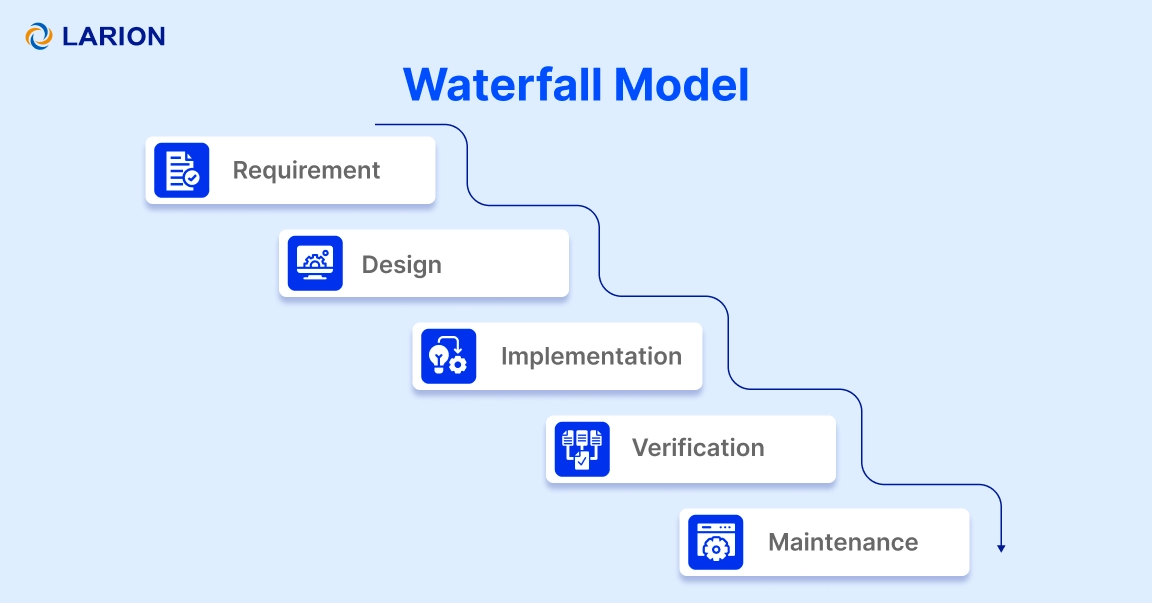

Waterfall model

Key Characteristics:

- Sequential Process: Each phase must be completed before moving to the next. Testing occurs after the development phase is finished.

Pros:

- Simple and easy to manage due to its linear nature.

- Works well for smaller projects where requirements are well understood upfront.

Cons:

- Testing is performed only after the coding stage, so defects may be detected late, when they are expensive and time-consuming to fix.

- Inflexible to changes once the project progresses past the requirement phase.

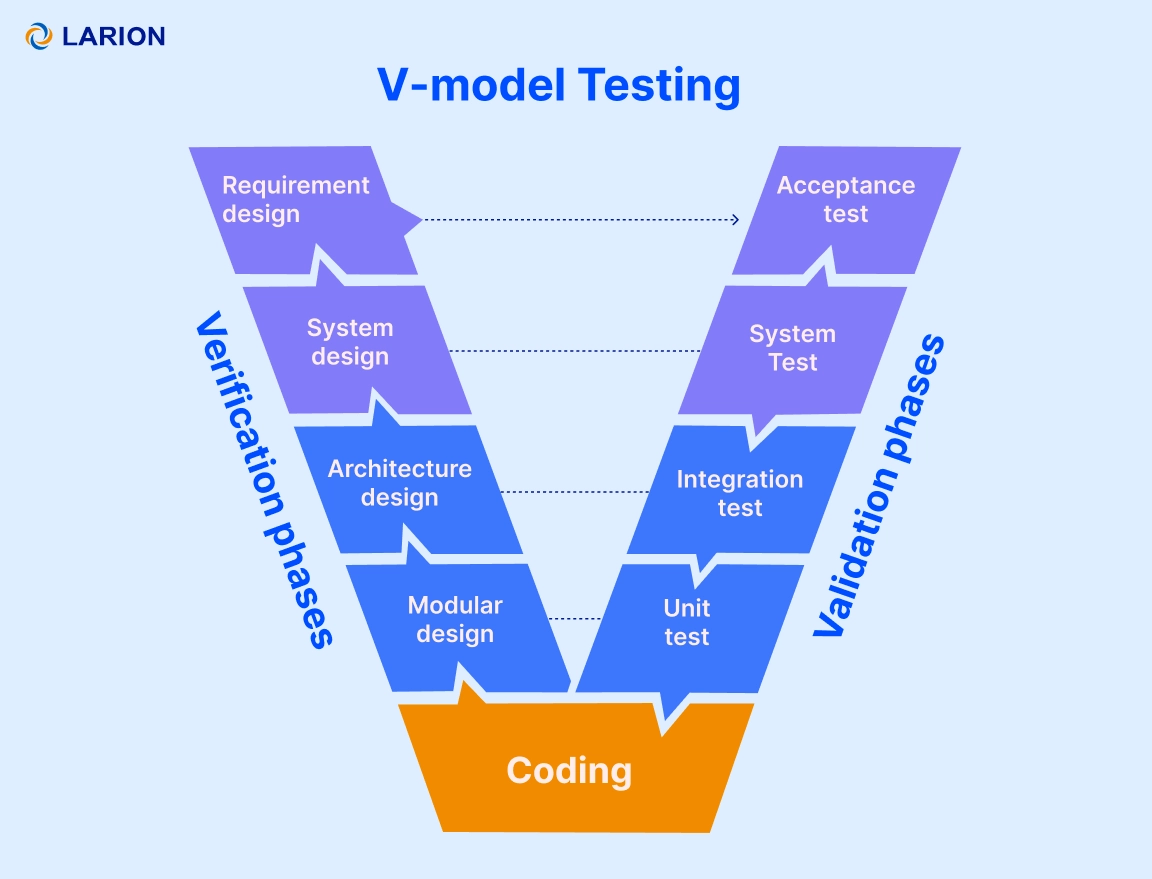

V-model testing

In the Waterfall model, QA teams wait until development is finished to start testing, which leaves limited time for fixes and can hurt product quality. The V-model addresses this by involving testers throughout the development process.

Key characteristics:

- Parallel testing: Test planning and design run in parallel with development. For example, unit tests are planned during detailed (module) design, integration tests during architectural design, and so on.

- Verification & validation: Verification checks whether each work product meets its specified requirements (the planning side of the V), while validation tests the built product against what users actually need (the execution side).

Pros:

- Defects are identified at earlier stages due to the parallel nature of testing and development

- Testing and development activities are aligned, improving traceability and defect prevention

Cons:

- Similar to the Waterfall model, it’s rigid and doesn’t handle changes well once development starts

- Not ideal for projects with frequently changing requirements

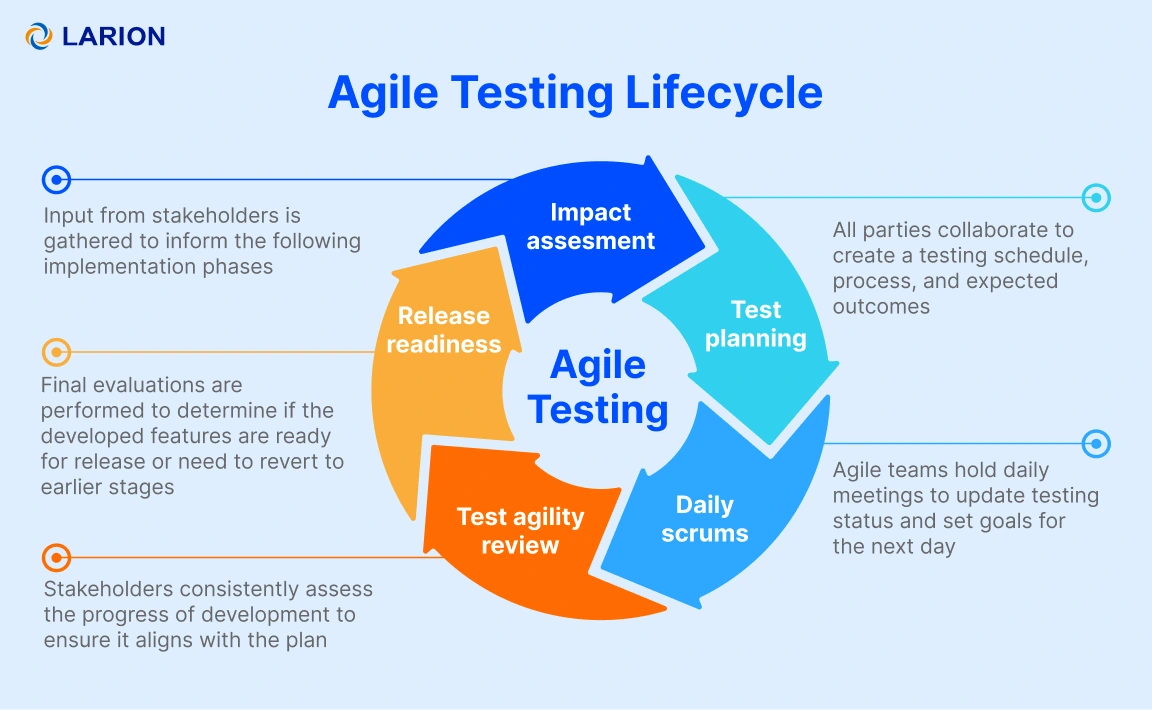

Agile testing

Agile is an iterative and incremental model where development and testing occur in small, manageable iterations or “sprints.” The Agile model has introduced practical methods for faster, high-quality software delivery, with Test-Driven Development (TDD) and Behavior-Driven Development (BDD) being two popular practices that improve code reliability and team collaboration.

Key characteristics:

- Continuous testing: Testing is done continuously, in every iteration, rather than being a separate phase.

- Collaboration: Testers, developers, and business stakeholders work closely together, ensuring that feedback is incorporated in real time.

- Frequent releases: Regular releases at the end of each sprint ensure that testing focuses on small, manageable changes.

Pros:

- Highly flexible, adapting to changes in requirements and scope.

- Testing early and often helps identify defects quickly, reducing the cost of fixing issues.

Cons:

- Requires more communication and coordination between team members.

- Can be difficult to manage if not implemented correctly, leading to scope creep.
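To give a feel for Test-Driven Development, here is a tiny sketch of its usual red, green, refactor rhythm. The `slugify` function is a hypothetical example and is not tied to any particular framework.

```python
# Step 1 (red): write the test first. At this point slugify does not
# exist yet, so running the test would fail.
def test_slugify():
    assert slugify("Hello World") == "hello-world"
    assert slugify("  Agile   Testing ") == "agile-testing"


# Step 2 (green): add the simplest implementation that makes it pass.
def slugify(title: str) -> str:
    """Lowercase the title and join its words with hyphens."""
    return "-".join(title.lower().split())


# Step 3 (refactor): clean up the code, re-running the test after each
# change to confirm the behavior is unchanged.
test_slugify()
```

Because the test exists before the code, every sprint leaves behind an automated check that later iterations can reuse.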

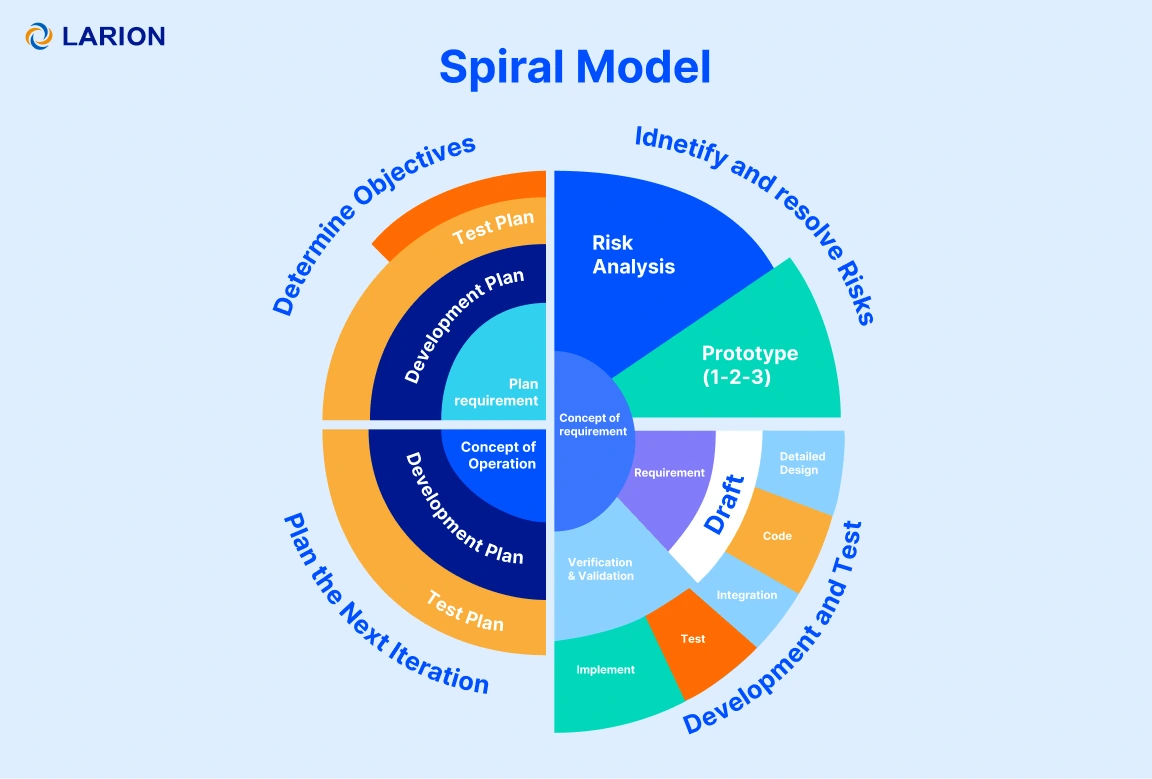

Spiral model

The Spiral model combines elements of both iterative and Waterfall models. It emphasizes risk management and is used for large, complex projects where high risks are involved.

Key characteristics:

- Risk assessment: Each phase starts with identifying and mitigating risks.

- Iterative nature: The model cycles through planning, risk analysis, engineering (coding and testing), and evaluation repeatedly, adding features in increments.

Pros:

- Focus on risk management makes it suitable for high-risk projects.

- Testing is integrated at multiple levels, providing continuous feedback loops.

Cons:

- Complex and expensive to implement.

- Requires careful risk analysis, which may not be feasible for smaller projects.

What’s ahead for software testing?

Recent years have witnessed the rise of AI in software testing, promising enhanced efficiency, accuracy, and speed. AI-powered tools are being applied across various areas, including test case generation, defect prediction, synthetic data creation, self-healing automation, visual testing, and UI validation.

As AI technologies continue to evolve, we can anticipate a shift toward autonomous testing in the coming years. This advanced approach will enable tests to be entirely created, executed, and managed by AI and machine learning, significantly reducing the need for human intervention and paving the way for a new era of automation in software testing.

How to enhance your software quality?

Effective software testing is crucial for ensuring that your solutions meet the growing expectations of today’s digital-savvy users. With a variety of testing types (such as functional, performance, and security testing), several methodologies, and both manual and automated approaches, navigating this landscape can be challenging. Moreover, choosing the right testing model, whether Waterfall, V-model, Agile, or Spiral, plays a significant role in the success of your project.

Having an experienced tech partner that provides in-depth insights and advice on testing types, methods, models, and approaches specifically tailored to your project needs is invaluable. Our team of testers has guided companies across various industries to accelerate their testing processes and maintain the highest quality standards.

Book your consultation with us today and empower your team with the knowledge and support needed to achieve exceptional software quality and reliability!